Abstract

This post details a Proof of Concept (PoC) originally developed a few years ago as I began my journey into hardware-assisted virtualization and AMD SVM. It serves as a technical archive of my learning process, born from a deep dive into the AMD Architecture Programmer’s Manuals and various online resources.

To bridge the gap between theory and implementation, the core structure of this hypervisor was built by analyzing and referencing open-source projects like AetherVisor and SimpleSVM. These resources were instrumental in helping me translate documentation into practical knowledge.

The sole objective of this project was education and technical curiosity. All techniques discussed are documented strictly for academic and research purposes. I do not encourage or condone the use of these methods for any malicious activity; this is simply a look back at a foundational learning project.

1. Introduction: The Frontier of “Ring -1”

In the adversarial realms of malware analysis, anti-cheat development, and offensive security, traditional Kernel-level (Ring 0) access faces diminishing returns. The evolution of Windows security mitigations—specifically PatchGuard (KPP) and Kernel Mode Code Integrity (KMCI)—has rendered classic techniques like SSDT or Inline Hooking both hazardous and trivially detectable. Consequently, the frontier of deep system introspection has shifted to “Ring -1” (virtualization mode).

This post details the architecture and development of Horus, a custom Type-2 Hypervisor for AMD64 processors using Secure Virtual Machine (SVM) technology. We will explore the journey of transparently virtualizing a running Windows system, moving beyond standard driver development into the complexities of processor microarchitecture and hardware exception handling. Technical highlights include the implementation of Nested Page Tables (NPT) for undetectable memory hooking and a stealthy Syscall Tracing mechanism based on exception injection.

Disclaimer: This project and the techniques discussed herein are strictly for education and research purposes regarding internal AMD architecture and the Windows Kernel.

2. Architecture & The “Blue Pill” process

The lifecycle of Horus begins as a standard Windows driver. Its primary goal is to perform the “Blue Pill” transition: migrating the running OS into a virtual machine on the fly.

2.1. System Virtualization Loop

The entry point, virtualize_system, iterates over every logical processor. Since the Guest state is local to each core, Horus must perform a strict initialization sequence for each one.

- Context Capture: We capture the current processor state (RtlCaptureContext) to ensure a seamless transition.

- VMCB Allocation: We allocate and clear the Virtual Machine Control Block (VMCB). This 4KB structure dictates the CPU behaviour, defining which events (like CPUID or MSR writes) trigger a #VMEXIT.

- Host State Setup: The VM_HSAVE_PA MSR is configured to point to a physical memory region where the processor saves the Host state before running the Guest.

- Launch: The driver invokes vm_launch (an assembly routine), which executes VMRUN. At this precise moment, the CPU promotes the driver to Host Mode (Ring -1) and demotes the running OS to Guest Mode.

2.2. Validating the State

Before virtualization, Horus rigorously validates the system state against AMD’s manual requirements (checking EFER, CR0, CR3 and CR4 combinations). If the Guest state is “illegal” (e.g., specific reserved bits are set), the VMRUN instruction would fail, potentially causing a system crash. Horus prevents this by pre-validating most of these conditions.

AMD64 Architecture Programmer’s Manual, Volume 2:

Illegal guest state combinations cause a #VMEXIT with error code VMEXIT_INVALID. The following conditions are considered illegal state combinations (note that some checks may be subject to VMCB Clean field settings, see below):

- EFER.SVME is zero.

- CR0.CD is zero and CR0.NW is set.

- CR0[63:32] are not zero.

- Any MBZ bit of CR3 is set.

- Any MBZ bit of CR4 is set.

- DR6[63:32] are not zero.

- DR7[63:32] are not zero.

- Any MBZ bit of EFER is set.

- EFER.LME and CR0.PG are both set and CR4.PAE is zero.

- EFER.LME and CR0.PG are both non-zero and CR0.PE is zero.

- The VMRUN intercept bit is clear.

- ASID is equal to zero.
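To make the checklist concrete, here is a minimal sketch of what a pre-flight check over a subset of these conditions might look like. The GuestStateSnapshot struct and the is_guest_state_legal function are illustrative names, not Horus code, and the model-specific MBZ checks for CR3, CR4 and EFER are deliberately left out.

```cpp
#include <cstdint>

// Architectural bit positions (AMD64 APM, Volume 2).
constexpr uint64_t EFER_LME  = 1ull << 8;
constexpr uint64_t EFER_SVME = 1ull << 12;
constexpr uint64_t CR0_PE    = 1ull << 0;
constexpr uint64_t CR0_NW    = 1ull << 29;
constexpr uint64_t CR0_CD    = 1ull << 30;
constexpr uint64_t CR0_PG    = 1ull << 31;
constexpr uint64_t CR4_PAE   = 1ull << 5;

// Hypothetical snapshot of the values about to be written into the VMCB.
struct GuestStateSnapshot {
    uint64_t efer;
    uint64_t cr0;
    uint64_t cr4;
    uint64_t dr6;
    uint64_t dr7;
    uint32_t asid;
    bool vmrun_intercepted;
};

// Returns false if any of the modelled illegal combinations is present.
// MBZ checks for CR3, CR4 and EFER are omitted because they depend on the CPU model.
constexpr bool is_guest_state_legal(GuestStateSnapshot s) {
    const bool long_mode_paging = (s.efer & EFER_LME) != 0 && (s.cr0 & CR0_PG) != 0;

    if ((s.efer & EFER_SVME) == 0) return false;                       // EFER.SVME is zero
    if ((s.cr0 & CR0_CD) == 0 && (s.cr0 & CR0_NW) != 0) return false;  // CD clear, NW set
    if ((s.cr0 >> 32) != 0) return false;                              // CR0[63:32] not zero
    if ((s.dr6 >> 32) != 0) return false;                              // DR6[63:32] not zero
    if ((s.dr7 >> 32) != 0) return false;                              // DR7[63:32] not zero
    if (long_mode_paging && (s.cr4 & CR4_PAE) == 0) return false;      // LME + PG without PAE
    if (long_mode_paging && (s.cr0 & CR0_PE) == 0) return false;       // LME + PG without PE
    if (!s.vmrun_intercepted) return false;                            // VMRUN intercept clear
    if (s.asid == 0) return false;                                     // ASID is zero
    return true;
}
```

A caller would fill the snapshot from the values it intends to load and refuse to execute VMRUN whenever the function returns false.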

3. Memory Introspection: “Split-View” NPT Hooking

Memory management is arguably the most complex aspect of a hypervisor. While standard paging translates Virtual Addresses (VA) to Physical Addresses (PA), virtualization adds a second layer: Guest Physical Addresses (GPA) to Host Physical Addresses (HPA) using Nested Page Tables (NPT).

Since standard AMD64 paging lacks a native “Execute-Only” permission bit (meaning any page marked “Present” is inherently readable), we cannot simply hide our hooks within a single memory mapping. To circumvent this, Horus leverages NPT to implement Split-View Hooking, a technique that bypasses PatchGuard integrity checks by showing one version of memory for reading and a different version for execution.

3.1. The Dual Hierarchy

Horus constructs two distinct NPT hierarchies:

- Primary NPT (Read View): This is the default table. It maps the guest physical memory 1:1 but marks our target hook pages as Non-Executable (NX). If Windows reads these pages to check for integrity, it sees the original, unmodified bytes.

- Shadow NPT (Execute View): This table is mostly Non-Executable, except for the specific pages we have hooked, which are marked Executable. These pages contain our shellcode or modified functions.

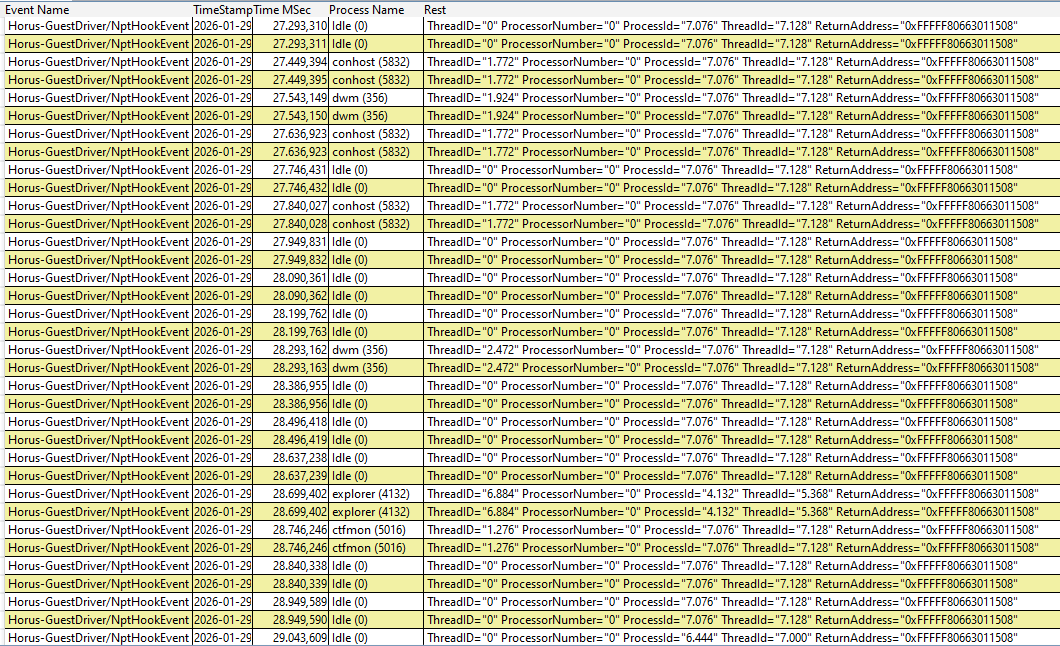

This simple diagram illustrates the two NPT hierarchies used by Horus.
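The difference between the two hierarchies comes down to how each one fills in the leaf entry for a given guest page. The sketch below models that decision with ordinary page-table bits. The Hook struct, the function names, and the idea that the hooked page is backed by a separate patched copy in the Shadow view are assumptions made for illustration, not a description of the actual Horus layout.

```cpp
#include <cstdint>

// Page-table entry bits shared by regular and nested page tables.
constexpr uint64_t PTE_PRESENT   = 1ull << 0;
constexpr uint64_t PTE_WRITE     = 1ull << 1;
constexpr uint64_t PTE_USER      = 1ull << 2;   // nested walks are treated as user accesses
constexpr uint64_t PTE_NX        = 1ull << 63;
constexpr uint64_t PTE_ADDR_MASK = 0x000FFFFFFFFFF000ull;

enum class View { Primary, Shadow };

// Hypothetical hook record: the guest page being hooked and the host page
// holding the patched copy of it.
struct Hook {
    uint64_t guest_page;
    uint64_t patched_host_page;
};

constexpr uint64_t make_leaf(uint64_t host_page, bool executable) {
    return (host_page & PTE_ADDR_MASK) | PTE_PRESENT | PTE_WRITE | PTE_USER |
           (executable ? 0ull : PTE_NX);
}

// Builds the leaf entry for one guest page in the requested view.
constexpr uint64_t npt_leaf_for(View view, uint64_t guest_page, Hook hook) {
    const bool hooked = (guest_page & PTE_ADDR_MASK) == (hook.guest_page & PTE_ADDR_MASK);
    if (view == View::Primary) {
        // Identity mapping; only the hooked page loses execute permission.
        return make_leaf(guest_page, !hooked);
    }
    // Shadow view: everything is NX except the hooked page, which points at the patched copy.
    return hooked ? make_leaf(hook.patched_host_page, true)
                  : make_leaf(guest_page, false);
}
```

Reads through the Primary view therefore always land on the original bytes, while execution of the hooked page can only succeed through the Shadow view.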

3.2. The Trapping Mechanism (#NPF)

The switching mechanism relies on the Nested Page Fault (#NPF) exit. Instead of relying on complex single-stepping (#DB) machinery such as the trap flag (TF), or on Intel’s Monitor Trap Flag (MTF), which has no direct SVM equivalent, Horus leverages the page fault mechanism to switch views dynamically:

- Execution Trap: When the OS attempts to execute code we hooked, the Primary NPT (marked NX) triggers a #VMEXIT(NPF).

- The Switch: Horus intercepts the fault, detects that the instruction pointer (RIP) matches our target, and updates the VMCB’s nCR3 to point to the Shadow NPT.

- Execution: The CPU resumes, now using the Shadow NPT where the code is executable. The payload/hook runs.

- Restoration: Once execution flows out of the hooked page into adjacent memory, another #NPF occurs (since the Shadow NPT maps the rest of memory as NX). The handler catches this and swaps back to the Primary NPT, closing the loop.

To the OS, the memory looks clean (Read View), but the CPU executes our modified code (Execute View). This “Ping-Pong” approach ensures robust isolation without the performance overhead of instruction-level tracing.

    void horus::vmm::npt::npt_exit_handler( VcpuData* vcpu )
    {
        EXITINFO1 exit_info1 = { 0 };
        exit_info1.AsUInt64 = vcpu->guest_vmcb.control_area.exit_info1;
        uint64_t guest_rip = vcpu->guest_vmcb.save_state_area.rip;

        ...

        // #NPF thrown due to execution attempt of a non-executable page
        if ( exit_info1.Fields.Execute == 1 )
        {
            auto rip_page = PAGE_ALIGN( guest_rip );
            bool is_hooked_page = false;

            // Iterate over all hooks and check whether RIP falls on a hooked page.
            for ( int i = 0; i < g_npt_state.hook_count; i++ )
            {
                auto& hook = g_npt_state.hook_pool[ i ];
                if ( hook.function_address == reinterpret_cast< void* >( guest_rip ) ||
                     PAGE_ALIGN( hook.function_address ) == rip_page )
                {
                    is_hooked_page = true;
                    break;
                }
            }

            if ( is_hooked_page )
            {
                // Switch to shadow NPT where the hooked page is executable and the target function contains our shellcode.
                vcpu->guest_vmcb.control_area.ncr3 = vcpu->npt_shared_data->shadow_npt_cr3;
            }
            else
            {
                // Switch back to primary NPT to resume normal execution.
                vcpu->guest_vmcb.control_area.ncr3 = vcpu->npt_shared_data->primary_npt_cr3;
            }

            // Clear bit 4 (NP) of vmcb_clean so the CPU reloads the nested paging state (nCR3).
            vcpu->guest_vmcb.control_area.vmcb_clean &= 0xFFFFFFEF;

            // TLB_CONTROL = 3: flush this guest's TLB entries on the next VMRUN.
            vcpu->guest_vmcb.control_area.tlb_control = 3;
        }
    }

Live stress-test of the Split-View NPT mechanism. The logs show the hypervisor successfully intercepting execution attempts of NtDeviceIoControlFile from critical system processes like dwm.exe (Desktop Window Manager) and explorer.exe.
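To see why this costs far less than instruction-level tracing, it helps to replay a short instruction trace against a toy model of the two views. In the sketch below an exit is counted only when the current view cannot execute the page that RIP is on, which means once on entry to the hooked page and once on the way out. The function names and the trace are made up for illustration.

```cpp
#include <cstddef>
#include <cstdint>

enum class View { Primary, Shadow };

constexpr uint64_t page_of(uint64_t address) { return address & ~0xFFFull; }

// Replays a trace of instruction addresses and returns how many simulated
// #NPF exits (view switches) it would cause for a single hooked page.
constexpr int count_view_switches(const uint64_t* rips, size_t count, uint64_t hooked_page) {
    View view = View::Primary;
    int exits = 0;
    for (size_t i = 0; i < count; ++i) {
        const bool on_hooked_page = page_of(rips[i]) == hooked_page;
        // Primary executes everything except the hooked page; Shadow executes only the hooked page.
        const bool executable = (view == View::Shadow) == on_hooked_page;
        if (!executable) {
            view = on_hooked_page ? View::Shadow : View::Primary;
            ++exits;
        }
    }
    return exits;
}

// Example trace: two instructions outside the hook, two on the hooked page, one outside again.
constexpr int example_exit_count() {
    const uint64_t trace[] = {0x1000, 0x1008, 0x2000, 0x2004, 0x3000};
    return count_view_switches(trace, 5, 0x2000);
}
```

For the example trace the model reports two exits regardless of how many instructions run inside the hooked page, whereas a single-stepping design would exit once per instruction.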

4. Stealthy Syscall Tracing

Traditional syscall hooking (modifying LSTAR MSR or the System Service Descriptor Table) is a guaranteed way to trigger PatchGuard. Horus bypasses this by modifying the hardware’s behaviour rather than the OS’s data structures.

4.1. The EFER.SCE bit Trick

On x64, the SYSCALL instruction is only valid if the SCE (System Call Enable) bit in the EFER register is set; otherwise it raises #UD. Horus clears this bit in the Guest’s state and intercepts reads/writes to EFER via the MSR permissions map (MSRPM, AMD’s MSR bitmap).

- To the Guest: We report that SCE is enabled (1).

- To the Hardware: We silently force SCE to be disabled (0).
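One way to picture the two faces of EFER is as a shadow value kept next to the VMCB. The sketch below is a simplified model under that assumption: EferShadow and the three helper functions are hypothetical names, and a real handler would also have to validate reserved bits before accepting a guest write.

```cpp
#include <cstdint>

constexpr uint64_t EFER_SCE  = 1ull << 0;   // System Call Enable
constexpr uint64_t EFER_SVME = 1ull << 12;  // must stay set in the guest's VMCB EFER

// Hypothetical per-vCPU shadow: what the guest believes EFER contains.
struct EferShadow {
    uint64_t guest_view;
    bool trace_syscalls;
};

// Value returned to the guest when it executes RDMSR on EFER.
constexpr uint64_t on_guest_rdmsr_efer(EferShadow shadow) {
    return shadow.guest_view;
}

// Guest WRMSR on EFER: remember what the guest asked for.
constexpr EferShadow on_guest_wrmsr_efer(EferShadow shadow, uint64_t value) {
    shadow.guest_view = value;
    return shadow;
}

// Value actually placed in the VMCB save area: SVME forced on,
// SCE forced off while tracing so that SYSCALL raises #UD.
constexpr uint64_t hardware_efer(EferShadow shadow) {
    uint64_t value = shadow.guest_view | EFER_SVME;
    if (shadow.trace_syscalls) {
        value &= ~EFER_SCE;
    }
    return value;
}
```

With this split, turning tracing off is only a matter of recomputing hardware_efer with trace_syscalls cleared; the guest-visible value never changes.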

4.2. Emulation via #UD

When a process executes SYSCALL:

- The CPU throws a #UD (Undefined Opcode) exception because the SCE bit is physically disabled.

- Horus intercepts this #UD exception before the Windows IDT sees it.

- The handler verifies the opcode is 0F 05 (SYSCALL).

- Emulation: The hypervisor manually updates RIP, RFLAGS, CS, SS and RCX, effectively mimicking the SYSCALL instruction in software.

    bool horus::vmm::syscalls::emulate_syscall( VcpuData* vcpu, GuestRegisters* guest_context )
    {
        ...

        // Check whether we are in long mode or compatibility mode
        // and set RIP to LSTAR or CSTAR accordingly
        if ( vcpu->guest_vmcb.save_state_area.cs_attrib.fields.long_mode == 0 &&
             vcpu->guest_vmcb.save_state_area.cs_attrib.fields.default_bit == 1 ) {
            // 32 bit mode
            vcpu->guest_vmcb.save_state_area.rip = __readmsr( MSR_CSTAR );
        }
        else if ( vcpu->guest_vmcb.save_state_area.cs_attrib.fields.long_mode == 1 ) {
            // 64 bit mode
            vcpu->guest_vmcb.save_state_area.rip = __readmsr( MSR_LSTAR );
        }
        else {
            ...
        }

        // RCX.q = next_RIP
        guest_context->rcx = guest_rip + instruction_length;

        // R11.q = RFLAGS with rf cleared
        guest_context->r11 = vcpu->guest_vmcb.save_state_area.rflags.Flags & ~static_cast< uint64_t >( X86_RFLAGS_RF );

        // RFLAGS = RFLAGS AND ~MSR_SFMASK
        // RFLAGS.RF = 0
        uint64_t msr_fmask = __readmsr( MSR_FMASK );
        vcpu->guest_vmcb.save_state_area.rflags.Flags &= ~( msr_fmask | X86_RFLAGS_RF );

        // Adjust CS and SS segments
        uint64_t msr_star = __readmsr( MSR_STAR );

        // CS.sel = MSR_STAR.SYSCALL_CS AND 0xFFFC
        vcpu->guest_vmcb.save_state_area.cs_selector = ( uint16_t )( ( msr_star >> 32 ) & ~3 );
        // CS.base = 0
        vcpu->guest_vmcb.save_state_area.cs_base = 0;
        // CS.limit = 0xFFFFFFFF
        vcpu->guest_vmcb.save_state_area.cs_limit = 0xFFFFFFFF;
        // CS.attr = 64-bit code,dpl0
        vcpu->guest_vmcb.save_state_area.cs_attrib.fields.type = 0xB;
        vcpu->guest_vmcb.save_state_area.cs_attrib.fields.system = 1;
        vcpu->guest_vmcb.save_state_area.cs_attrib.fields.dpl = 0;
        vcpu->guest_vmcb.save_state_area.cs_attrib.fields.present = 1;
        vcpu->guest_vmcb.save_state_area.cs_attrib.fields.long_mode = 1;
        vcpu->guest_vmcb.save_state_area.cs_attrib.fields.default_bit = 0;
        vcpu->guest_vmcb.save_state_area.cs_attrib.fields.granularity = 1;

        // SS.sel = MSR_STAR.SYSCALL_CS + 8
        vcpu->guest_vmcb.save_state_area.ss_selector = ( uint16_t )( ( ( msr_star >> 32 ) & ~3 ) + 8 );
        // SS.base = 0
        vcpu->guest_vmcb.save_state_area.ss_base = 0;
        // SS.limit = 0xFFFFFFFF
        vcpu->guest_vmcb.save_state_area.ss_limit = 0xFFFFFFFF;
        // SS.attr = 64-bit stack,dpl0
        vcpu->guest_vmcb.save_state_area.ss_attrib.fields.type = 3;
        vcpu->guest_vmcb.save_state_area.ss_attrib.fields.system = 1;
        vcpu->guest_vmcb.save_state_area.ss_attrib.fields.dpl = 0;
        vcpu->guest_vmcb.save_state_area.ss_attrib.fields.present = 1;
        vcpu->guest_vmcb.save_state_area.ss_attrib.fields.default_bit = 1;
        vcpu->guest_vmcb.save_state_area.ss_attrib.fields.granularity = 1;

        // CPL = 0
        vcpu->guest_vmcb.save_state_area.cpl = 0;

        return true;
    }

- Tracing: Before resuming, we can inspect the registers (syscall index in RAX, arguments in RCX, RDX, etc.) to log the activity of specific processes.

Horus also handles the SYSRET instruction similarly, ensuring the return path to User Mode is equally controlled and stable.
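Because any undefined opcode raises #UD, the handler first has to decide whether the faulting bytes are really SYSCALL or SYSRET before emulating anything. The sketch below shows one way that check could be written. It assumes the bytes at the guest RIP have already been copied into a buffer and that the guest is running 64-bit code, where 0x40-0x4F are REX prefixes. FastCallOp and decode_fast_call are illustrative names.

```cpp
#include <cstddef>
#include <cstdint>

enum class FastCallOp { None, Syscall, Sysret };

// Classifies the instruction bytes found at the faulting RIP.
// SYSCALL is 0F 05 and SYSRET is 0F 07; a 64-bit return is encoded with a
// REX.W prefix (48 0F 07), so one optional REX byte is skipped first.
constexpr FastCallOp decode_fast_call(const uint8_t* bytes, size_t length) {
    size_t i = 0;
    if (length > 0 && (bytes[0] & 0xF0) == 0x40) {
        i = 1;  // skip the REX prefix (valid in 64-bit code only)
    }
    if (length < i + 2 || bytes[i] != 0x0F) {
        return FastCallOp::None;
    }
    if (bytes[i + 1] == 0x05) {
        return FastCallOp::Syscall;
    }
    if (bytes[i + 1] == 0x07) {
        return FastCallOp::Sysret;
    }
    return FastCallOp::None;
}
```

Anything classified as None is a genuine #UD and should be reflected back to the guest rather than emulated.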

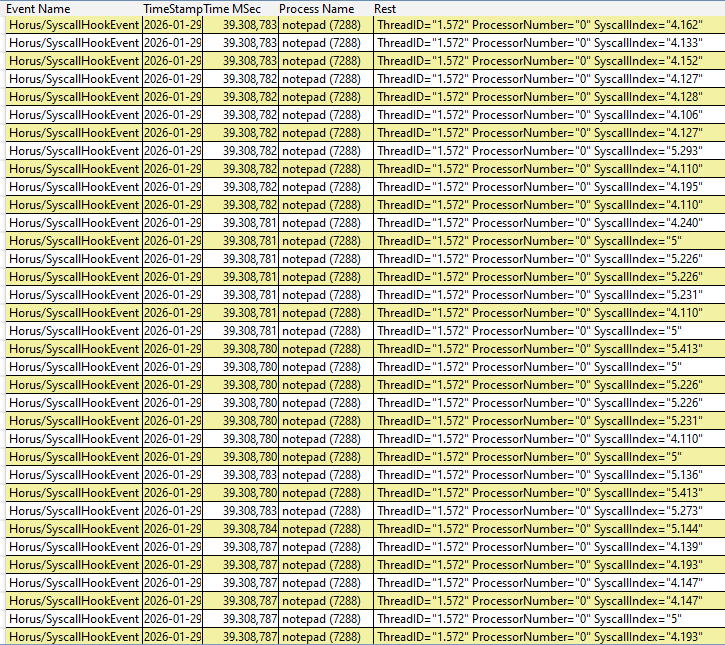

Real-time tracing of notepad.exe. The hypervisor intercepts the syscalls via #UD injection, resolves the origin process using its CR3 register, and logs the event.

4.3. The Cost of Transparency: Performance

While the #UD injection technique offers superior stealth compared to MSR hooking, it introduces significant overhead. A native SYSCALL instruction executes in mere nanoseconds. However, forcing a #VMEXIT and handling the subsequent exception in the hypervisor incurs a cost of thousands of CPU cycles per call. This makes this technique ideal for surgical tracing of specific processes (filtered by CR3), but potentially unsuitable for system-wide monitoring on production workloads.
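The CR3 filter mentioned above can be as small as a masked comparison. The following sketch assumes a single monitored process and compares only the page-frame bits of CR3, since the low 12 bits carry flags or a PCID rather than part of the table address. SyscallFilter and should_log are hypothetical names.

```cpp
#include <cstdint>

// Bits 51:12 of CR3 hold the physical base of the top-level page table.
constexpr uint64_t CR3_BASE_MASK = 0x000FFFFFFFFFF000ull;

// Hypothetical filter state: which address space to log, and whether logging is on.
struct SyscallFilter {
    uint64_t target_cr3;
    bool enabled;
};

// Returns true when the syscall being emulated belongs to the monitored process.
constexpr bool should_log(SyscallFilter filter, uint64_t guest_cr3) {
    return filter.enabled &&
           ((guest_cr3 ^ filter.target_cr3) & CR3_BASE_MASK) == 0;
}
```

Calls from every other process still pay for the #VMEXIT and the emulation, but they skip the logging work entirely.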

5. Stealth & Communication

5.1. CPUID Virtualization

The CPUID instruction is the most common method for software to detect a hypervisor. To avoid detection by malware checking for “Hypervisor Present” bits:

- Horus intercepts every CPUID execution.

- It clears the Hypervisor Present Bit (Leaf 0x1, ECX bit 31).

- It can return a custom Vendor String (acting as a “secret handshake”) to authorized clients while appearing as a standard AMD processor to the OS.
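The filtering step can be sketched as a pure function applied to whatever the real CPUID instruction returned. Only the leaf 0x1 bit is architectural here. The handshake leaf, the key passed in ECX, and the returned string are invented for the example, since the post does not describe how authorized clients identify themselves.

```cpp
#include <cstdint>

struct CpuidRegs {
    uint32_t eax;
    uint32_t ebx;
    uint32_t ecx;
    uint32_t edx;
};

constexpr uint32_t CPUID_FEATURES_LEAF  = 0x00000001;
constexpr uint32_t CPUID_HV_PRESENT_BIT = 1u << 31;   // leaf 0x1, ECX bit 31

// Hypothetical handshake parameters, chosen for this sketch only.
constexpr uint32_t HANDSHAKE_LEAF = 0x4000FFFF;
constexpr uint32_t HANDSHAKE_KEY  = 0x48525553;

// Packs four characters into a register the way CPUID vendor strings are laid out.
constexpr uint32_t pack4(char a, char b, char c, char d) {
    return uint32_t(uint8_t(a)) | (uint32_t(uint8_t(b)) << 8) |
           (uint32_t(uint8_t(c)) << 16) | (uint32_t(uint8_t(d)) << 24);
}

// Takes the leaf, the ECX input and the native CPUID result; returns what the guest should see.
constexpr CpuidRegs filter_cpuid(uint32_t leaf, uint32_t ecx_in, CpuidRegs native) {
    if (leaf == HANDSHAKE_LEAF && ecx_in == HANDSHAKE_KEY) {
        // Authorized client: answer with a recognizable 12-byte string.
        return CpuidRegs{HANDSHAKE_LEAF, pack4('H', 'o', 'r', 'u'),
                         pack4('s', 'S', 'k', 'e'), pack4('t', 'c', 'h', '!')};
    }
    if (leaf == CPUID_FEATURES_LEAF) {
        native.ecx &= ~CPUID_HV_PRESENT_BIT;  // hide the Hypervisor Present bit
    }
    return native;
}
```

Every other leaf passes through untouched, so the guest keeps seeing the vendor and feature data of the physical processor.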

5.2. VMMCALL Interface

Communication between the standard kernel driver (or a user-mode client) and the Hypervisor is handled via VMMCALL. This instruction triggers an immediate exit, allowing us to issue commands such as:

- PING: Verify hypervisor presence.

- PLACE_NPT_HOOK: Install a hook on a specific physical address.

- MONITOR_SYSCALL: Target a specific process (identified by CR3) for logging.
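On the host side, a command set like this usually ends up in a small dispatcher inside the VMMCALL exit handler. The sketch below assumes the command code arrives in RCX and its argument in RDX. The numeric values, the HvState fields, and the status codes are placeholders rather than the real Horus ABI.

```cpp
#include <cstdint>

// Hypothetical command and status numbering.
enum class HvCommand : uint64_t { Ping = 1, PlaceNptHook = 2, MonitorSyscall = 3 };
enum class HvStatus : uint64_t { Ok = 0, InvalidArgument = 1, UnknownCommand = 2 };

// Minimal stand-in for the hypervisor state the commands modify.
struct HvState {
    uint64_t hooked_physical_address;
    uint64_t monitored_cr3;
};

struct HvResult {
    HvStatus status;
    HvState state;
};

// Handles one VMMCALL: rcx selects the command, rdx carries its argument.
constexpr HvResult dispatch_vmmcall(HvState state, uint64_t rcx, uint64_t rdx) {
    switch (static_cast<HvCommand>(rcx)) {
    case HvCommand::Ping:
        return HvResult{HvStatus::Ok, state};
    case HvCommand::PlaceNptHook:
        if (rdx == 0) {
            return HvResult{HvStatus::InvalidArgument, state};
        }
        state.hooked_physical_address = rdx;
        return HvResult{HvStatus::Ok, state};
    case HvCommand::MonitorSyscall:
        if (rdx == 0) {
            return HvResult{HvStatus::InvalidArgument, state};
        }
        state.monitored_cr3 = rdx;
        return HvResult{HvStatus::Ok, state};
    }
    return HvResult{HvStatus::UnknownCommand, state};
}
```

The status would typically be handed back to the guest in RAX before the handler advances RIP past the VMMCALL instruction.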

To invoke these commands from the Guest, we rely on a custom assembly wrapper that executes the vmmcall instruction. This wrapper acts as a bridge, passing arguments directly to the Hypervisor via general-purpose registers (RCX, RDX, R8), bypassing standard Windows IOCTL mechanisms entirely.

    svm_vmmcall proc frame
        .endprolog
        PUSHAQ      ; Save all GPRs to stack
                    ; This prevents the corruption of certain guest registers used during vm unload
        vmmcall     ; Trigger VMEXIT
        POPAQ       ; Restore GPRs after returning from Host
        ret         ; Return to the caller
    svm_vmmcall endp

6. Stability and Clean-up

Writing a hypervisor requires handling system stability with extreme care.

- Atomic Unload: The cleanup routine uses Inter-Processor Interrupts (IPIs) to synchronize all cores. A critical, often overlooked step is the manual restoration of the Host’s GDTR, IDTR, and segment registers (TR, FS, GS) to match the Guest’s state before executing vmoff. Without this synchronization, the driver would return to Ring 0 with a corrupted processor context, creating an unstable “Zombie Driver” that inevitably crashes the system. Once the state is consistent, Horus disables SVM, tears down the NPTs, and frees all allocations, ensuring a zero-leak exit.

- Exception Injection: Horus correctly reflects exceptions back to the Guest. If an exception occurs that isn’t related to our virtualization logic, it is injected back into the Guest state (eventually reaching the Windows IDT). This ensures the OS can handle standard faults, such as genuine Page Faults, normally.
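Under the hood, an injection is a single 64-bit write to the VMCB’s EVENTINJ field. The sketch below packs that value by hand to make the bit layout from the AMD manual visible: vector in bits 7:0, type in bits 10:8, error-code-valid in bit 11, valid in bit 31 and the error code in bits 63:32. The function name encode_event_injection is illustrative.

```cpp
#include <cstdint>

constexpr uint64_t EVENT_TYPE_EXCEPTION = 3;  // EVENTINJ.TYPE value for exceptions

// Builds the raw EVENTINJ value for injecting an exception into the guest.
constexpr uint64_t encode_event_injection(uint8_t vector, bool has_error_code, uint32_t error_code) {
    return uint64_t(vector)                                       // bits 7:0   vector
         | (EVENT_TYPE_EXCEPTION << 8)                            // bits 10:8  type
         | (uint64_t(has_error_code ? 1 : 0) << 11)               // bit 11     error code valid
         | (1ull << 31)                                           // bit 31     valid
         | (uint64_t(has_error_code ? error_code : 0) << 32);     // bits 63:32 error code
}
```

For example, reflecting a genuine #UD (vector 6, no error code) back to the guest produces 0x80000306, while a #GP (vector 13) with error code 0 produces 0x80000B0D.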

    void VcpuData::inject_exception( int vector, bool deliver_error_code, uint32_t error_code )
    {
        EVENT_INJECTION event_injection = { 0 };

        event_injection.vector = vector;
        event_injection.type = SVM_EVENT_TYPE_EXCEPTION;
        event_injection.valid = 1;
        event_injection.push_error_code = deliver_error_code ? 1 : 0;
        event_injection.error_code = deliver_error_code ? error_code : 0;

        this->guest_vmcb.control_area.event_inject = event_injection.fields;
    }

7. Conclusion

Developing Horus has been a rigorous exercise in low-level system engineering. It demonstrates a fundamental truth: at the silicon level, the Operating System is just another application.

By moving the battleground to Ring -1, we achieve a level of introspection far superior to traditional kernel drivers. The combination of Split-View NPT Hooking and Exception-based Syscall Emulation allows us to manipulate execution flow and monitor system activity without triggering Windows’ integrity protection mechanisms like PatchGuard.

While this project is strictly an academic Proof of Concept, the foundations laid here—handling the VMCB, managing physical memory translation, and virtualizing hardware exceptions—are the exact mechanisms employed by both advanced enterprise security solutions and sophisticated state-sponsored rootkits.

Acknowledgments & References

The full source code for Horus, including the hypervisor driver and the guest-driver controller, is available on GitHub. I invite fellow researchers to review, fork, and contribute to the project.

This project stands on the shoulders of giants. I would like to credit the foundational research by Daax and Nick Peterson. Although their work focuses on Intel VT-x, it provided the conceptual framework and deep insights into hardware-assisted virtualization that I adapted to build this AMD SVM implementation.

In addition, I would like to thank all the researchers and developers who have contributed to the open-source community, with enlightening projects such as AetherVisor and SimpleSVM. Their work was instrumental in helping me translate documentation into practical knowledge.

- Daax

- Nick Peterson

- Satoshi Tanda

- Back Engineering

- Daax’s 5 Days to Virtualization

- Nick Peterson’s Syscall Hooking via EFER

- AMD-V Hypervisor Development - A Brief Explanation

- SimpleSVM

- AMD64 Architecture Programmer’s Manual, Volume 2

- AMD64 Architecture Programmer’s Manual, Volume 3

- AMD64 Secure Virtual Machine Architecture Reference Manual

— Redaa.